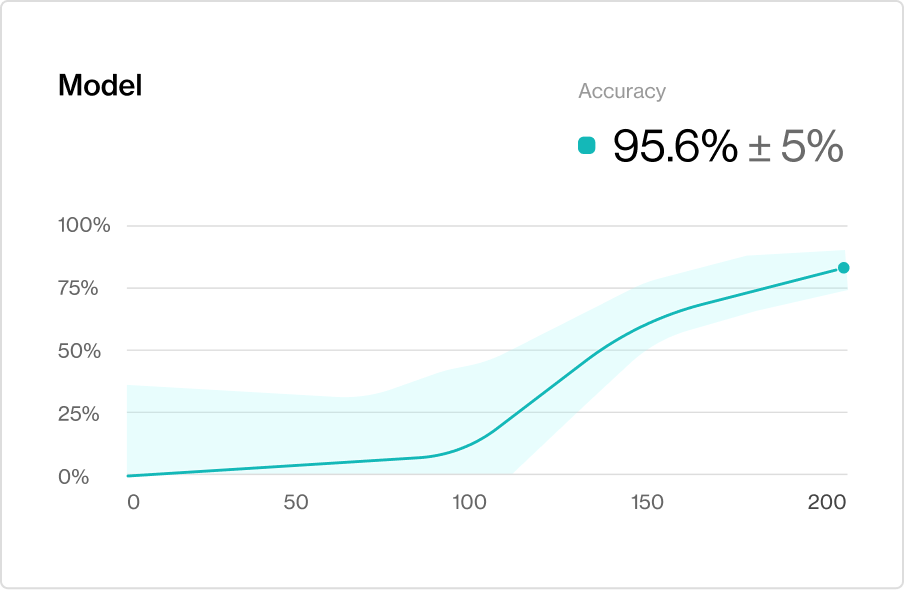

Machine learning test metrics should always be calculated with credible intervals. A credible interval gives upper and lower bounds on a model's true performance, so you know how big your test set needs to be and when to trust your models. Humanloop Active Testing makes this easy by providing uncertainty bounds on your test metrics.
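The sketch below illustrates the idea for the simplest metric, accuracy. It is not how Humanloop Active Testing computes its bounds, which this post does not describe. It assumes each test example is an independent pass/fail trial and puts a uniform Beta(1, 1) prior on the true accuracy, so the posterior after the test is Beta(1 + correct, 1 + incorrect). The function name `accuracy_credible_interval` and its parameters are hypothetical. The quantiles are found on a numerical grid so the example needs only the standard library.

```python
import math


def accuracy_credible_interval(correct, total, level=0.95, prior=(1.0, 1.0), grid_size=100_000):
    """Equal-tailed credible interval for accuracy under a Beta-Binomial model.

    Hypothetical helper: assumes independent pass/fail test examples and a
    Beta(prior[0], prior[1]) prior on the true accuracy (uniform by default).
    Returns (posterior_mean, lower, upper).
    """
    if not 0 <= correct <= total:
        raise ValueError("need 0 <= correct <= total")
    # Conjugate update: the posterior is Beta(a, b).
    a = prior[0] + correct
    b = prior[1] + (total - correct)
    # Midpoints of grid cells on (0, 1) avoid log(0) at the endpoints.
    xs = [(i + 0.5) / grid_size for i in range(grid_size)]
    # Unnormalised log-density of Beta(a, b) at each grid point.
    logs = [(a - 1) * math.log(x) + (b - 1) * math.log1p(-x) for x in xs]
    peak = max(logs)
    weights = [math.exp(v - peak) for v in logs]  # subtract max for numerical stability
    norm = sum(weights)
    tail = (1 - level) / 2
    lower = upper = None
    cumulative = 0.0
    # Walk the approximate CDF and record where it crosses each tail probability.
    for x, w in zip(xs, weights):
        cumulative += w / norm
        if lower is None and cumulative >= tail:
            lower = x
        if cumulative >= 1 - tail:
            upper = x
            break
    return a / (a + b), lower, upper


if __name__ == "__main__":
    # Same 90% observed accuracy, different test-set sizes.
    for correct, total in [(45, 50), (900, 1000)]:
        mean, lo, hi = accuracy_credible_interval(correct, total)
        print(f"{correct}/{total}: mean={mean:.3f}, 95% interval=({lo:.3f}, {hi:.3f})")
```

Both runs observe 90% accuracy, but the interval from 1,000 examples is much narrower than the one from 50. Watching the interval width shrink as examples are added is one way to judge whether a test set is large enough to trust the result.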